What is Regression Analysis?

The Essential Guide to Understanding Regression in Statistics

In this article

- What is Regression Analysis?

- Types of Regression Analysis

- Simple Linear Regression

- Multiple Linear Regression

- Advanced Regression Techniques

- Key Concepts and Assumptions

- Practical Applications of Regression Analysis

- Common Questions About Regression

- Conclusion

What is Regression Analysis?

Think of regression analysis as your data-driven crystal ball. It's a statistical technique for examining the relationships between variables and making educated predictions. In essence, regression examines how one variable, the dependent variable (let's call it Y), changes when another variable, the independent variable (X), changes. Unlike simple correlation, which only tells you that two things move together, regression goes a step further: it estimates the size and direction of the relationship and gives you a formula to predict Y when you know X.

Imagine you're a marketer (or a savvy business owner), and you want to know how much your sales will increase if you bump up your advertising budget. By running a regression analysis, you could figure out just how much more money that extra ad spend might bring in. This predictive power makes regression a staple tool in fields like economics, finance, and even in health sciences where it's used to predict outcomes based on patient data.

But here's where things get interesting - regression is not just about numbers. It's about insights. With the right data and a well-fitted model, regression analysis can uncover hidden trends, expose underlying patterns, and help you make decisions with confidence. Want to learn more about how regression works under the hood? Check out Investopedia's guide on Regression Analysis and dive deeper into the math and logic behind it.

Remember, the real power of regression analysis comes when you combine data with creativity - think like a data scientist, but act like a storyteller. This is your chance to turn numbers into narratives and make data-driven decisions that propel your business forward. So, whether you're forecasting sales, predicting customer behavior, or just trying to understand the world a little better, regression analysis is your go-to tool.

Why Regression Analysis is Important:

Regression analysis isn't just a tool - it's your roadmap to making data-driven decisions that are both insightful and actionable. By understanding the relationships between variables, regression helps you see the big picture and navigate complex business or research landscapes with confidence. Here's why it's so crucial:

- Data-Driven Decisions: Regression analysis allows you to predict future trends by analyzing historical data. Whether it's forecasting sales, budgeting, or resource allocation, regression equips you with the insights to make informed decisions that drive success.

- Understanding Relationships: With regression, you can identify how variables interact and influence one another. For instance, you can determine how marketing spend impacts sales, or how employee training affects productivity. This understanding helps in optimizing strategies and identifying key drivers of performance.

- Optimization and Efficiency: By pinpointing which variables have the most significant impact, regression analysis enables you to optimize processes and allocate resources more effectively. This means you can streamline operations, reduce costs, and improve overall efficiency.

- Mitigating Risks: Understanding potential risks and their predictors through regression analysis allows you to proactively manage and mitigate those risks. This is particularly valuable in finance, healthcare, and project management, where anticipating and addressing potential pitfalls can save time and resources.

- Enhancing Strategic Planning: Regression analysis provides a foundation for strategic planning by offering insights into trends and relationships that might not be immediately obvious. By leveraging these insights, you can develop long-term strategies that are aligned with your organizational goals.

Types of Regression Analysis

Regression analysis isn't a one-size-fits-all solution - it's a toolbox filled with different types of models, each designed to answer specific questions and handle various kinds of data. Whether you're predicting outcomes, uncovering relationships, or optimizing processes, there's a type of regression analysis that's just right for the job. Below, we'll explore some of the most common types of regression, breaking down when and why to use each one. Think of this as your quick guide to choosing the right tool for the task at hand.

| Type of Regression | Description | When to Use |
|---|---|---|
| Simple Linear Regression | This is the most straightforward type of regression, analyzing the relationship between one independent variable and one dependent variable. It's like drawing a straight line through your data to see how one thing changes with another. | Use this when you have a single predictor and you're looking to understand or predict its impact on an outcome. Perfect for scenarios like predicting sales based on ad spend or estimating home prices based on square footage. |
| Multiple Linear Regression | When life (and data) gets more complicated, multiple linear regression steps in. It lets you analyze the impact of two or more independent variables on a single dependent variable, giving you a more nuanced view of what drives outcomes. | Ideal for understanding complex relationships where multiple factors are at play - like determining how education level, work experience, and hours worked affect salary. If you need to factor in multiple variables, this is your go-to. |
| Logistic Regression | Not all outcomes are numbers - sometimes you're predicting categories (like yes/no, success/failure). Logistic regression is your tool for binary outcomes, using a logistic function to model probabilities. | Use this when your dependent variable is binary (two categories). For example, predicting whether a customer will buy a product or not based on demographic and behavioral data. If your question is "Will it happen or not?" logistic regression estimates how likely the answer is yes. |
| Polynomial Regression | Life isn't always linear, and neither is your data. Polynomial regression allows for curve-fitting by including polynomial terms in your model. It's like adding some flexibility to that straight line to better capture the reality of your data. | Best used when your data shows a clear curvilinear relationship - such as modeling the growth rate of a company over time or predicting sales peaks and troughs. When a straight line just won't cut it, go polynomial. |
| Ridge and Lasso Regression | When your independent variables are highly correlated (a problem known as multicollinearity), ridge and lasso regression come to the rescue. They apply regularization techniques to help you build more reliable models by penalizing large coefficients. | Use ridge and lasso when you're dealing with multicollinearity or when you need to reduce the complexity of your model by selecting the most important predictors. These methods are particularly useful in high-dimensional data, such as in machine learning applications. |

Choosing the right type of regression analysis is crucial for extracting the most meaningful insights from your data. Whether you're a data scientist, a business analyst, or just someone looking to make sense of numbers, knowing which tool to use and when can make all the difference.

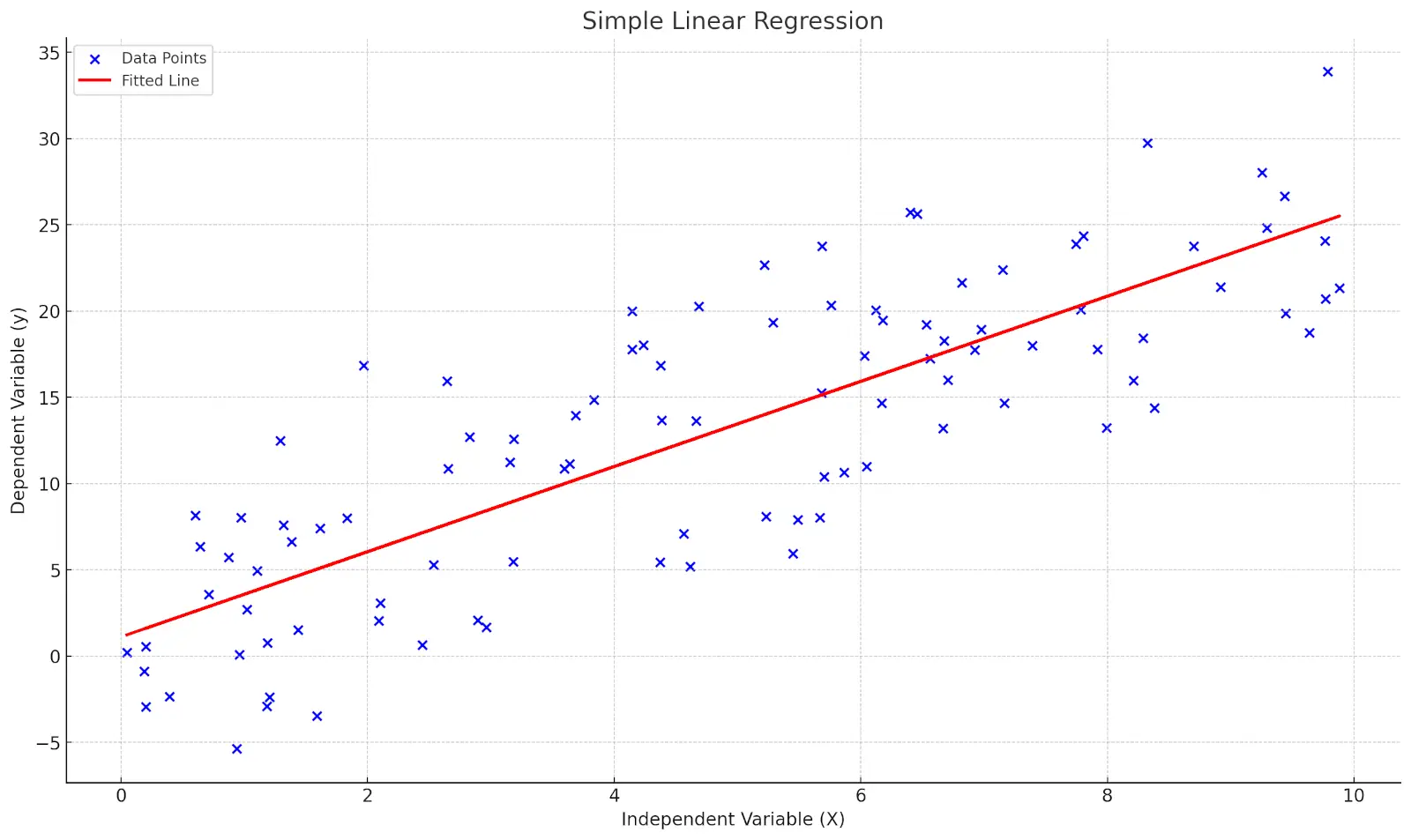

Simple Linear Regression

Imagine you're trying to understand how one thing affects another - like how the amount of money you spend on advertising impacts your sales. Simple linear regression is your go-to tool for this. It's all about exploring the relationship between a single independent variable (X) and a dependent variable (Y), and finding the best-fitting line that connects them. This line is more than just a trend - it's a mathematical model that helps you make predictions.

The Regression Equation

The heart of simple linear regression is its equation:

Y = a + bX + error

- Y: The dependent variable (what you're trying to predict)

- X: The independent variable (the predictor)

- a: The Y-intercept (where the line crosses the Y-axis)

- b: The slope (how much Y changes for every unit increase in X)

This equation is your crystal ball - plug in a value for X, and out pops your predicted Y.

How It Works

The goal of simple linear regression is to find the line that best fits your data points by minimizing the sum of the squared residuals, where a residual is the vertical distance between an actual data point and the value the line predicts for it. The smaller the residuals, the better your model fits the data.
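The least-squares fit described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a production implementation: the function name `fit_simple_linear_regression` and the ad-spend and sales figures are made up for the example, and the slope is computed with the standard closed-form formula (covariance of X and Y divided by the variance of X).

```python
def fit_simple_linear_regression(xs, ys):
    """Return (a, b) for the line Y = a + bX that minimizes squared residuals."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope: how X and Y vary together, relative to how much X varies.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    b = sxy / sxx
    # Intercept: the fitted line always passes through (mean_x, mean_y).
    a = mean_y - b * mean_x
    return a, b


# Hypothetical data (assumption): ad spend and sales, both in thousands.
ad_spend = [1.0, 2.0, 3.0, 4.0, 5.0]
sales = [3.1, 4.9, 7.2, 8.8, 11.0]

a, b = fit_simple_linear_regression(ad_spend, sales)
residuals = [y - (a + b * x) for x, y in zip(ad_spend, sales)]

print(f"intercept a = {a:.3f}, slope b = {b:.3f}")
print("residuals:", [round(r, 3) for r in residuals])
print(f"predicted sales at ad spend 6: {a + b * 6.0:.2f}")
```

With an intercept in the model, the residuals of a least-squares fit sum to zero (up to rounding), which is a quick sanity check on any implementation.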

Want to see how this plays out in practice? Try plotting your data points on a graph, draw a line through them, and see how closely they align - congratulations, you've just visualized simple linear regression!

Real-World Example

Let's say you run a company and want to predict your sales based on your advertising budget. By collecting data on past advertising spends and corresponding sales, you can use simple linear regression to determine the relationship between these two variables. Once you've got your regression equation, you can predict future sales based on different levels of ad spend. It's a straightforward but powerful way to turn data into decisions.

Why It Matters

Simple linear regression is a foundational tool in data analysis and business strategy. It's easy to implement but incredibly insightful, helping you understand key relationships in your data. Whether you're predicting future trends, optimizing budgets, or simply making sense of numbers, this technique gives you a solid starting point.

Multiple Linear Regression

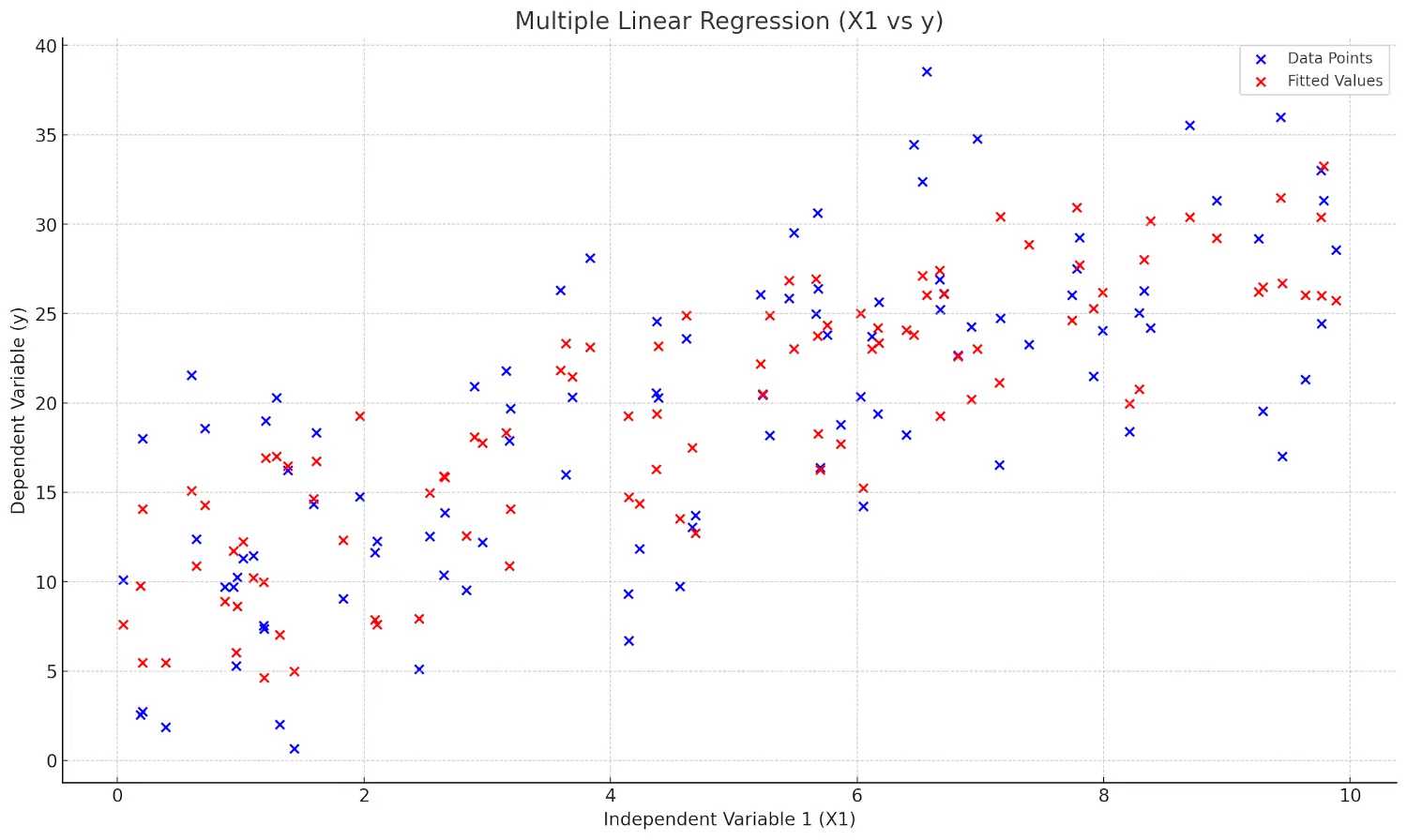

When life gets more complex, so does our data. Enter multiple linear regression - a powerful extension of simple linear regression that allows you to consider multiple independent variables simultaneously. Imagine you're not just predicting sales based on ad spend, but also factoring in social media activity, seasonality, and economic indicators. Multiple linear regression is your tool for untangling these complex webs of influence and getting to the heart of what drives your outcomes.

The Regression Equation

The equation for multiple linear regression builds on the simplicity of the single-variable model, but adds layers of depth:

Y = a + b1X1 + b2X2 + ... + bnXn + error

- Y: The dependent variable (the outcome you're predicting)

- X1, X2, ..., Xn: The independent variables (the predictors)

- a: The intercept (the predicted value of Y when every predictor equals zero)

- b1, b2, ..., bn: The coefficients (showing the impact of each predictor)

Each coefficient tells a story about the relationship between its variable and the outcome, controlling for all the other variables in the model.

How It Works

Multiple linear regression doesn't just fit a line to your data - it fits a multidimensional plane (or hyperplane, if you want to get technical). This plane balances all the independent variables, finding the best fit that minimizes the error across all dimensions. It's like juggling, but with data, ensuring every variable gets its due consideration.
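One common way to find that best-fitting plane is to solve the so-called normal equations, (XᵀX)β = Xᵀy. The sketch below does this in plain Python with a small Gaussian-elimination helper. The function names and the house data are hypothetical, and the prices are generated from a known formula so the recovered coefficients can be checked. In practice you would normally use a statistics or linear-algebra library instead.

```python
def solve_linear_system(A, y):
    """Solve A x = y by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [y[i]] for i, row in enumerate(A)]  # augmented matrix
    for col in range(n):
        # Swap in the row with the largest pivot for numerical stability.
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    # Back-substitution on the upper-triangular system.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x


def fit_multiple_linear_regression(rows, ys):
    """Return [a, b1, ..., bn] by solving the normal equations (X'X) beta = X'y."""
    X = [[1.0] + list(r) for r in rows]  # leading 1.0 carries the intercept
    k = len(X[0])
    XtX = [[sum(x[i] * x[j] for x in X) for j in range(k)] for i in range(k)]
    Xty = [sum(x[i] * y for x, y in zip(X, ys)) for i in range(k)]
    return solve_linear_system(XtX, Xty)


# Hypothetical houses (assumption): (size in 100 sq ft, age in years).
# Prices (in $1000s) are generated exactly from 50 + 10*size - 2*age,
# so a correct fit should recover those three numbers.
houses = [(10, 5), (15, 20), (20, 10), (12, 30), (25, 2), (18, 15)]
prices = [50 + 10 * size - 2 * age for size, age in houses]

coefs = fit_multiple_linear_regression(houses, prices)
print("a, b_size, b_age =", [round(c, 6) for c in coefs])
```

Each recovered coefficient reads as the change in price for a one-unit change in that predictor with the other held fixed.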

For those who want to visualize this with two predictors, imagine tilting and raising a flat surface until the total squared vertical distance between it and the data points is as small as possible. That's the magic of multiple linear regression.

Real-World Example

Let's say you're in the real estate business, trying to predict house prices. It's not just about size (square footage), but also location, age of the property, number of bedrooms, and maybe even proximity to schools or public transport. By collecting data on all these factors, multiple linear regression can help you build a model that predicts house prices with much greater accuracy than considering any one factor alone.

Why It Matters

Multiple linear regression is the workhorse of predictive analytics. It's not just about seeing how one thing affects another, but how multiple factors work together to influence an outcome. Whether you're analyzing market trends, evaluating product features, or optimizing operations, this method gives you the comprehensive view you need to make informed decisions and drive success.

Advanced Regression Techniques

Sometimes, your data throws you a curveball - or maybe several. When simple linear models just won't cut it, it's time to bring out the advanced tools. These techniques help you capture complex relationships, manage multicollinearity, and make accurate predictions in scenarios where the basic methods fall short. Let's dive into a few key players in the advanced regression toolbox:

- Polynomial Regression: Not all relationships are linear, and that's where polynomial regression shines. By adding polynomial terms to your model (like X² or X³), you can capture curves and bends in your data, allowing for more accurate modeling of non-linear relationships. It's like upgrading from a straight ruler to a flexible curve ruler, giving you more precision in predicting complex outcomes.

- Ridge and Lasso Regression: When your independent variables are a little too cozy (a.k.a. multicollinearity), it can mess with your model's accuracy. Ridge and Lasso regression tackle this issue head-on. Ridge regression adds a penalty to large coefficients, while Lasso not only penalizes but can also shrink coefficients to zero, effectively selecting a simpler model. These techniques are invaluable when you're dealing with a lot of predictors and need to streamline your model.

- Logistic Regression: Not all outcomes are continuous - sometimes you're predicting categories, like whether a patient will develop a disease (yes/no). Logistic regression is your go-to for binary outcomes, using an S-shaped curve to model probabilities. It's like flipping a coin, but with a lot more data backing up your prediction.

Real-World Example

In the healthcare sector, logistic regression is often used to predict patient outcomes based on risk factors like age, weight, and medical history. By analyzing these variables, healthcare providers can better assess the likelihood of disease and take preventive action.
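To make the S-shaped curve concrete, here is a minimal sketch of logistic regression with a single predictor, fitted by plain gradient descent on the log-loss. The risk scores and outcomes are invented for illustration, and the learning rate and step count are arbitrary choices that happen to work for this small dataset. Real analyses would typically rely on a statistics library with a more robust optimizer.

```python
import math


def sigmoid(z):
    """The S-shaped logistic function, mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic_regression(xs, labels, learning_rate=0.1, steps=5000):
    """Fit P(y = 1 | x) = sigmoid(a + b*x) by gradient descent on the log-loss."""
    a, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Gradient of the average log-loss with respect to a and b.
        errors = [sigmoid(a + b * x) - y for x, y in zip(xs, labels)]
        grad_a = sum(errors) / n
        grad_b = sum(e * x for e, x in zip(errors, xs)) / n
        a -= learning_rate * grad_a
        b -= learning_rate * grad_b
    return a, b


# Hypothetical data (assumption): a standardized risk score and whether
# the condition occurred (1) or not (0). The classes overlap on purpose.
risk_score = [-2.0, -1.5, -1.0, -0.5, 0.0, 0.5, 1.0, 1.5, 2.0]
outcome = [0, 0, 0, 1, 0, 1, 1, 1, 1]

a_hat, b_hat = fit_logistic_regression(risk_score, outcome)
for score in (-2.0, 0.0, 2.0):
    print(f"risk score {score:+.1f} -> estimated probability "
          f"{sigmoid(a_hat + b_hat * score):.2f}")
```

The output of the model is a probability, not a yes/no label. Turning it into a decision requires choosing a threshold, and the right threshold depends on the cost of each kind of mistake.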

Key Concepts and Assumptions

Before you start fitting models and making predictions, it's crucial to ensure that your analysis rests on solid ground. Key concepts and assumptions in regression analysis are the bedrock that keeps your findings reliable and your conclusions trustworthy. Let's break down what you need to know:

- Linearity: Linear regression assumes that the relationship between the independent and dependent variables is linear. If your data doesn't follow a straight line, you might need to reconsider your model or apply transformations to better fit the data.

- Independence: Each observation in your data should be independent of the others. If your data points are related (like in time series data), you'll need to adjust your model to account for these dependencies, or else your standard errors and significance tests can be misleading.

- Homoscedasticity: This fancy term means that the variance of the errors (residuals) should be consistent across all levels of the independent variables. If you see a "funnel" shape in your residuals plot, that's a sign of heteroscedasticity, which makes standard errors and confidence intervals unreliable.

- Normality: For hypothesis tests and confidence intervals to be trustworthy, especially in small samples, the residuals should be approximately normally distributed. Note that this applies to the residuals, not to the raw variables. If normality is clearly violated, consider transforming your data or using a non-parametric approach.

Important Metrics:

| Metric | Description | Why It Matters |
|---|---|---|
| R-squared | R-squared represents the proportion of variance in the dependent variable that's explained by the independent variables. | A higher R-squared indicates a closer fit to the data the model was built on, showing how much of the outcome's variation is explained by your predictors. In ordinary least squares it never decreases when you add predictors, so a high value alone doesn't prove the model is good. |
| P-value | The p-value for a coefficient is the probability of seeing an estimate at least this far from zero if the predictor truly had no effect. | P-values help you judge which predictors show a statistically detectable relationship with the outcome. By convention, a p-value below 0.05 is called statistically significant, but significance alone doesn't tell you the effect is large or that the predictor must be kept. |
| Standard Error | The standard error of the regression measures the typical distance that the observed values fall from the regression line, in the units of the dependent variable. | Lower standard errors mean more precise predictions, making this metric essential for assessing the reliability of your model. |
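As a rough illustration of two of these metrics, the sketch below computes R-squared and the standard error of the regression from observed and fitted values. The function name and the four data points are assumptions made for the example. The fitted values come from the least-squares line Y = 0 + 1.9X for X = 1, 2, 3, 4.

```python
import math


def r_squared_and_residual_se(ys, predictions, n_predictors):
    """Return (R-squared, standard error of the regression)."""
    ss_res = sum((y - p) ** 2 for y, p in zip(ys, predictions))  # unexplained
    mean_y = sum(ys) / len(ys)
    ss_tot = sum((y - mean_y) ** 2 for y in ys)                  # total
    r2 = 1.0 - ss_res / ss_tot
    # Degrees of freedom: observations minus estimated coefficients
    # (one per predictor, plus the intercept).
    dof = len(ys) - n_predictors - 1
    residual_se = math.sqrt(ss_res / dof)
    return r2, residual_se


# Hypothetical example (assumption): observed Y and the fitted values
# from the least-squares line Y = 1.9X at X = 1, 2, 3, 4.
observed = [2.0, 4.0, 5.0, 8.0]
fitted = [1.9, 3.8, 5.7, 7.6]

r2, se = r_squared_and_residual_se(observed, fitted, n_predictors=1)
print(f"R-squared = {r2:.4f}")
print(f"standard error of the regression = {se:.4f}")
```

Because the standard error is in the same units as Y, it is often easier to explain to non-specialists than R-squared.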

Pro Tips for Regression Analysis:

- Always check assumptions before interpreting results. A model that violates these assumptions can lead to misleading conclusions.

- Use diagnostic plots like residual plots, Q-Q plots, and scatterplots to visually assess how well your model fits the data and whether any assumptions have been violated.

- If you spot issues like non-linearity or heteroscedasticity, consider transforming your variables or exploring different types of regression models that might better suit your data.

Practical Applications of Regression Analysis

Regression analysis isn't just a statistical tool - it's a cornerstone of decision-making across countless industries. From predicting future trends to optimizing strategies, regression offers a way to make data-driven choices with confidence. Let's take a closer look at how different sectors are leveraging this powerful technique:

| Field | Applications | Examples |
|---|---|---|
| Business and Economics | Companies use regression analysis to predict future sales based on past performance and external factors like economic indicators. By understanding these relationships, businesses can optimize their marketing spend, set competitive prices, and plan for future growth. | For instance, regression can help identify which marketing channels yield the highest return on investment (ROI), guiding strategic allocation of advertising budgets. |
| Healthcare | Healthcare providers use regression to predict outcomes such as recovery times or the likelihood of developing certain conditions based on patient history and lifestyle. This helps in tailoring treatment plans and improving patient care. | For example, regression models can predict the risk of heart disease by analyzing patient data on diet, exercise, and genetic factors, enabling early intervention. |
| Social Sciences | Researchers might use regression analysis to explore how different socioeconomic factors contribute to educational outcomes, or how income levels influence overall well-being. These insights can guide policy-making and educational reforms. | For instance, regression can be used to study the correlation between public health spending and life expectancy, helping governments allocate resources more effectively. |
| Environmental Science | Environmental scientists use regression analysis to understand and predict the effects of human activities on the environment. | For example, regression models can be used to predict how changes in temperature and precipitation patterns will affect crop yields, guiding agricultural practices and food security planning. |
| Engineering | Engineers apply regression analysis to predict system performance and optimize designs. | For example, regression models can forecast when machinery is likely to fail based on usage data, allowing for preventative maintenance and reducing downtime. Similarly, regression can help in optimizing the design of new products by understanding how different material properties affect performance. |

Example

Consider a study where regression analysis is used to explore the impact of education level and work experience on salary. This approach helps organizations set equitable pay scales by quantifying how much each additional year of education or experience contributes to salary increases.

Common Questions About Regression

Regression analysis is a powerful tool, but it often raises questions, especially for those new to the field. Below are answers to some of the most frequently asked questions about regression analysis:

What is the difference between correlation and regression?

While both correlation and regression analyze relationships between variables, they serve different purposes. Correlation measures the strength and direction of a relationship between two variables, but it does not imply causation. Regression, on the other hand, not only measures the relationship but also models how one variable predicts another. Like correlation, regression on observational data does not by itself establish causation; causal claims need support from the study design, such as a randomized experiment.

When should I use linear regression versus logistic regression?

Use linear regression when your dependent variable is continuous (e.g., predicting sales, revenue, or temperature). Use logistic regression when your dependent variable is binary (e.g., predicting whether a customer will buy a product or not, or whether a patient will develop a disease). Outcomes with more than two categories call for extensions such as multinomial logistic regression.

How do I check if my data is suitable for regression analysis?

Before performing regression analysis, ensure that your data meets key assumptions such as linearity, independence, homoscedasticity, and normality. You can use diagnostic plots like residual plots, Q-Q plots, and scatterplots to visually assess these assumptions. If the assumptions are violated, consider transforming your data or using a different model.

What does R-squared tell me about my regression model?

R-squared, or the coefficient of determination, indicates how much of the variance in the dependent variable is explained by the independent variables in your model. A higher R-squared value means a better fit, but it's important to consider other factors like the complexity of the model and the presence of outliers.

Can I use regression analysis for time series data?

Yes, regression analysis can be applied to time series data, but it requires special considerations. For example, you need to account for autocorrelation (when current values are correlated with past values), which violates the independence assumption. Time series models such as ARIMA (AutoRegressive Integrated Moving Average), or regression models that allow for autocorrelated errors, are designed for this purpose.
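One widely used quick check for first-order autocorrelation in regression residuals is the Durbin-Watson statistic. The sketch below computes it directly from a list of residuals ordered in time. The two residual series are invented to show the extremes. Values near 2 suggest little first-order autocorrelation, values toward 0 suggest positive autocorrelation, and values toward 4 suggest negative autocorrelation.

```python
def durbin_watson(residuals):
    """Durbin-Watson statistic for residuals listed in time order."""
    # Sum of squared changes between consecutive residuals...
    numerator = sum(
        (residuals[t] - residuals[t - 1]) ** 2 for t in range(1, len(residuals))
    )
    # ...relative to the overall size of the residuals.
    denominator = sum(e ** 2 for e in residuals)
    return numerator / denominator


# Hypothetical residuals (assumption): one series that drifts slowly,
# one that flips sign at every step.
trending = [1.0, 1.0, 1.0, -1.0, -1.0, -1.0]
alternating = [1.0, -1.0, 1.0, -1.0]

print(f"trending residuals:    DW = {durbin_watson(trending):.3f}")
print(f"alternating residuals: DW = {durbin_watson(alternating):.3f}")
```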

How can I avoid overfitting in my regression model?

Overfitting occurs when your model is too complex and captures noise rather than the true underlying relationship. To avoid overfitting, use techniques such as cross-validation, simplifying your model by reducing the number of predictors, or applying regularization methods like Ridge or Lasso regression.
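As a rough sketch of the cross-validation idea, the code below splits the data into k folds, fits a simple linear regression on all folds but one, and measures squared prediction error on the held-out fold. The helper names and the data are assumptions for illustration, and the folds are assigned by index for reproducibility rather than shuffled as they usually would be.

```python
def fit_line(xs, ys):
    """Least-squares intercept and slope for Y = a + bX."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    b = sxy / sxx
    return mean_y - b * mean_x, b


def k_fold_mse(xs, ys, k=3):
    """Average held-out mean squared error over k folds."""
    n = len(xs)
    fold_errors = []
    for fold in range(k):
        # Observation i belongs to fold (i % k); that fold is held out.
        test_idx = [i for i in range(n) if i % k == fold]
        train_idx = [i for i in range(n) if i % k != fold]
        a, b = fit_line([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        squared = [(ys[i] - (a + b * xs[i])) ** 2 for i in test_idx]
        fold_errors.append(sum(squared) / len(squared))
    return sum(fold_errors) / k


# Hypothetical data (assumption): roughly Y = 1 + 2X with small noise.
xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0]
noisy_ys = [3.2, 4.8, 7.1, 9.0, 10.7, 13.3, 14.9, 17.2, 18.8]
exact_ys = [1.0 + 2.0 * x for x in xs]

print(f"cross-validated MSE, noisy data: {k_fold_mse(xs, noisy_ys):.4f}")
print(f"cross-validated MSE, exact line: {k_fold_mse(xs, exact_ys):.2e}")
```

Comparing this held-out error across candidate models, rather than the error on the data used for fitting, is what guards against choosing a model that merely memorizes noise.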

What is multicollinearity and how do I handle it?

Multicollinearity occurs when independent variables in a regression model are highly correlated with each other, which inflates the variance of the coefficient estimates and makes individual coefficients unstable and hard to interpret. You can detect multicollinearity using the Variance Inflation Factor (VIF). To handle it, consider removing or combining correlated variables, or using Ridge or Lasso regression, which can mitigate the effects of multicollinearity.
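For a predictor j, the VIF is 1 / (1 - R²ⱼ), where R²ⱼ comes from regressing that predictor on all the others. With only two predictors, R²ⱼ is simply their squared correlation, which keeps the sketch below short. The function name and the data are hypothetical. A commonly cited rule of thumb treats VIF values above roughly 5 to 10 as a warning sign, though the exact cutoff is a judgment call.

```python
def vif_two_predictors(x1, x2):
    """VIF for either predictor in a model with exactly two predictors."""
    n = len(x1)
    mean1, mean2 = sum(x1) / n, sum(x2) / n
    s12 = sum((a - mean1) * (b - mean2) for a, b in zip(x1, x2))
    s11 = sum((a - mean1) ** 2 for a in x1)
    s22 = sum((b - mean2) ** 2 for b in x2)
    r_squared = s12 ** 2 / (s11 * s22)  # squared Pearson correlation
    return 1.0 / (1.0 - r_squared)


# Hypothetical predictors (assumption).
ad_spend = [1.0, 2.0, 3.0, 4.0, 5.0]
impressions = [2.1, 3.9, 6.2, 7.8, 10.1]   # almost a multiple of ad_spend
store_visits = [5.0, 1.0, 4.0, 2.0, 3.0]   # only weakly related to ad_spend

print(f"ad_spend vs impressions:  VIF = {vif_two_predictors(ad_spend, impressions):.1f}")
print(f"ad_spend vs store_visits: VIF = {vif_two_predictors(ad_spend, store_visits):.3f}")
```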

Why might my regression model have a low R-squared but still be useful?

A low R-squared value indicates that the model explains only a small portion of the variance in the dependent variable. However, the model can still be useful if it provides statistically significant predictors, helps understand relationships between variables, or if the low R-squared is expected due to the nature of the data (e.g., in studies with high variability).

How can I interpret the coefficients in my regression model?

In a linear regression model, coefficients represent the change in the dependent variable for a one-unit change in the independent variable, holding all other variables constant. Positive coefficients indicate a direct relationship, while negative coefficients indicate an inverse relationship. In multiple regression, the interpretation remains the same, but it's important to consider the context and potential interactions between variables.

Can regression analysis be used for forecasting?

Yes, regression analysis is widely used for forecasting in fields like finance, economics, and business. By analyzing historical data, regression models can predict future trends. However, the accuracy of forecasts depends on the quality of the data and the validity of the assumptions behind the model.

What is the purpose of regularization in regression analysis?

Regularization techniques like Ridge and Lasso regression add a penalty to the coefficients of the model to prevent overfitting and improve generalization to new data. Ridge regression penalizes the sum of the squared coefficients, while Lasso regression penalizes the sum of the absolute coefficients, which can also lead to variable selection by shrinking some coefficients to zero.
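The difference between the two penalties is easiest to see with a single centered predictor and no intercept, where both solutions have a closed form. The sketch below uses that simplified setting. The function names, data, and penalty values are assumptions for illustration, and the way the penalty is scaled varies between libraries, so these numbers will not match any particular package's `alpha` or `lambda` directly.

```python
def ridge_slope(xs, ys, lam):
    """Minimize sum((y - b*x)^2) + lam * b^2 for one centered predictor."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    # The penalty inflates the denominator, shrinking b toward zero.
    return sxy / (sxx + lam)


def lasso_slope(xs, ys, lam):
    """Minimize sum((y - b*x)^2) + lam * |b| for one centered predictor."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    # Soft-thresholding: subtract lam/2 from |sxy|, but never go below zero.
    shrunk = max(abs(sxy) - lam / 2.0, 0.0)
    return (shrunk if sxy >= 0 else -shrunk) / sxx


# Hypothetical centered data (assumption): both lists average to zero.
xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
ys = [-1.0, -0.4, 0.1, 0.3, 1.0]

for lam in (0.0, 2.0, 10.0):
    print(f"lambda = {lam:4.1f}: ridge b = {ridge_slope(xs, ys, lam):.3f}, "
          f"lasso b = {lasso_slope(xs, ys, lam):.3f}")
```

In this toy setting, ridge keeps shrinking the slope but never makes it exactly zero for any finite penalty, while lasso sets it to exactly zero once the penalty is large enough. That behavior is what makes lasso useful for variable selection.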

These answers provide a deeper understanding of regression analysis, helping you navigate common challenges and apply this powerful tool more effectively in your work.

Conclusion

Regression analysis is a versatile and powerful tool for analyzing relationships between variables and making predictions. Whether you're a business analyst, healthcare researcher, or social scientist, understanding how to properly apply and interpret regression techniques is essential for making data-driven decisions.

For more insights and resources, explore other topics on SuperSurvey such as Survey Research Design, Quantitative Data, and Statistical Significance.