Survey Questions

100+ Examples and Cheatsheet Tips

In this article

- Examples of Good Survey Questions

- Examples of Bad Survey Questions

- Aims & Response Bias

- See What's Been Done Before

- Choose Your Question Type

- How Many Questions Should a Survey Have

- How to Order Questions

- How to Phrase Survey Questions

- Ensure Variety in Options

- Keep It Focused

- Avoid Repetition

- Keep Things Flexible

- Pilot

- Types of Survey Questions

Writing good survey questions is an important part of survey research because it helps you avoid response bias. How you construct your questions will depend on your research objectives and on how much detail you want to obtain.

Examples of Good Survey Questions

The following examples illustrate well-written survey questions that avoid common pitfalls in question design. These questions are clear, specific, and unbiased, allowing respondents to provide accurate and valuable feedback. The examples draw on best practices from sources like AIFS, Pew Research, and NCBI.

| Good Question | Why It's Good |
|---|---|
| How would you rate your overall satisfaction with our customer service? | This question is neutral and does not assume any level of satisfaction, allowing for an unbiased response. |
| What is your opinion on the government's new healthcare policy? | It asks for an opinion on a specific topic, making it easier for respondents to provide focused feedback. |
| Which of the following fruits do you prefer? (Select all that apply: Apple, Banana, Orange, Grape, Peach, None of the above) | It offers a broad range of options and includes "None of the above", so no respondent is forced into an answer that does not apply. |
| Which online payment method do you use most frequently? | It is directly relevant to the respondent's own experience and asks for specific information, which makes it valuable for analysis. |
| Do you believe the new policy will positively affect environmental conservation efforts? | It is clearly structured around one specific aspect of the policy, which leads to more precise and insightful responses. |
| How likely are you to recommend our product to a friend or colleague? | This question is clear and straightforward, allowing respondents to provide an honest and measurable response. |
| What are the top three features you value in a smartphone? | It asks for specific preferences, making the feedback more actionable for product development. |
| How often do you use our service? | This question is specific and directly related to the respondent's behavior, providing useful data for service optimization. |
| What improvements would you suggest for our website? | This open-ended question invites detailed feedback, allowing respondents to express their opinions fully. |
| How would you rate the quality of our product? | It directly addresses product quality without leading or biasing the respondent. |
| Which of the following best describes your experience with our customer support? | This question provides multiple, clearly defined options, allowing for precise data collection. |
| Do you feel that our service meets your expectations? | It is direct and focused on expectations, offering valuable insights into customer satisfaction. |
| What is the primary reason for your visit today? | This question is specific and targeted, helping you understand the respondent's immediate needs or motivations. |
| How would you describe your overall experience with our product? | This open-ended question allows for a comprehensive response, capturing the nuances of the user's experience. |
| What do you like most about our service? | It invites respondents to name strengths without telling them how satisfied they should be; pairing it with a question about weaknesses keeps the survey balanced. |
| What challenges have you encountered while using our product? | This question directly seeks out potential issues, providing actionable insights for improvement. |
| How does our product compare to others you have used? | This question invites comparative feedback, which can be valuable for understanding market positioning. |
| What additional features would you like to see in our product? | It asks for constructive input on product development, inviting innovation and improvement. |
| How well does our product meet your needs? | This question is direct and focused on functionality, providing clear insights into product performance. |
| How easy was it to find the information you were looking for on our website? | This question is specific and focused on the user experience, making the feedback actionable for improving website usability. |

For more guidance on writing effective survey questions, refer to resources from Pew Research, Oxford Academic, and the National Center for Biotechnology Information.

Examples of Bad Survey Questions

Designing survey questions requires careful consideration to avoid bias, ambiguity, and other pitfalls that can lead to unreliable or invalid results. The table below lists examples of bad survey questions, drawing on sources such as AIFS, Pew Research, and NCBI, and explains why each one is problematic.

| Bad Question | Why It's Bad |
|---|---|
| How satisfied are you with the excellent customer service provided by our company? | Assumes that the customer service is excellent, which can lead to biased responses and does not allow respondents to express dissatisfaction. |
| How do you feel about the government's actions? | Too vague: it does not specify which actions are meant, leading to varied and unhelpful responses. |
| What is your favorite fruit? (Options: Apple, Banana, Orange) | The limited answer choices do not cover all possible preferences, leading to incomplete data. |
| Have you ever shopped online using a computer, laptop, tablet, smartphone, or any other electronic device? | Needlessly wordy: shopping online inherently requires an electronic device, so the list of devices adds nothing. "Have you ever shopped online?" asks the same thing. |
| Do you think the new policy will affect things? | Too broad and unclear: it does not specify what "things" or which "new policy" is meant, leading to ambiguous responses. |
| Do you agree that our product is the best on the market? | Leading question that encourages respondents to agree rather than give an unbiased opinion. |
| Do you think our customer service team could be more helpful? | Contains a negative assumption, which might lead respondents to answer more negatively than they would otherwise. |
| How often do you enjoy our services? | Assumes the respondent enjoys the services, which can bias responses and does not capture negative experiences. |
| On a scale of 1-10, how amazing was your experience? | Uses exaggerated language ("amazing") that can bias responses, rather than inviting an objective assessment. |
| Wouldn't you agree that our new feature is beneficial? | Leading question that pushes respondents toward agreeing, so it does not capture their true opinions. |
| How much do you love our product? | Assumes positive feelings, leading to biased responses that do not reflect the full range of customer experiences. |
| Why do you dislike our competitors? | Loaded question that assumes respondents dislike competitors, which can lead to biased and unreliable data. |
| How often do you feel our service is subpar? | Negative framing can lead respondents to focus on negative aspects rather than give a balanced view. |
| Do you think our product is better than others? | Assumes the respondent has experience with other products and introduces comparison bias. |
| Wasn't the event venue fantastic? | Leading question that assumes the venue was well received and leaves no room for critical feedback. |
| How satisfied are you with our product's unparalleled quality? | Assumes that the quality is unparalleled, which can push respondents toward a more positive rating. |
| How did you feel about our perfect customer service? | Uses loaded language ("perfect") that can bias responses, rather than inviting objective feedback. |
| How much do you value our exceptional offerings? | Loaded with positive language ("exceptional"), leading respondents toward a favorable response. |
| Don't you think our product is worth the price? | Leading question that pushes respondents toward agreeing, so it does not capture their true opinion of the product's value. |
| Would you say our service exceeds expectations? | Assumes the service exceeds expectations, which can bias responses and leaves little room for neutral or negative feedback. |
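The bad examples above share a few recognizable wording patterns: leading openers such as "Wouldn't you" or "Don't you", and loaded words such as "excellent", "amazing", or "perfect". As a minimal sketch, assuming your draft questions are stored as plain Python strings, a short script can flag these patterns for manual review. The phrase lists are hypothetical and built only from the examples in this table; a match is a prompt to reread the question, not proof of bias, and a question with no flags is not guaranteed to be neutral.

```python
import re

# Hypothetical phrase lists drawn from the bad examples above; extend them for your own survey.
LEADING_OPENERS = (
    "do you agree",
    "wouldn't you",
    "don't you",
    "wasn't the",
    "would you say",
)
LOADED_WORDS = {
    "excellent", "amazing", "perfect", "unparalleled",
    "exceptional", "fantastic", "love", "subpar",
}


def flag_wording(question: str) -> list[str]:
    """Return human-readable warnings for leading or loaded wording."""
    warnings = []
    text = question.lower()
    # Leading openers nudge respondents toward "yes".
    for opener in LEADING_OPENERS:
        if text.startswith(opener):
            warnings.append(f"leading opener: '{opener}'")
    # Loaded words presuppose a positive or negative judgment.
    words = set(re.findall(r"[a-z']+", text))
    for word in sorted(words & LOADED_WORDS):
        warnings.append(f"loaded word: '{word}'")
    return warnings


drafts = [
    "How satisfied are you with the excellent customer service provided by our company?",
    "Wouldn't you agree that our new feature is beneficial?",
    "How would you rate your overall satisfaction with our customer service?",
]
for q in drafts:
    print(q, "->", flag_wording(q) or "no flags")
```

A simple word list keeps the check transparent, which matters more here than catching every case: the final judgment about neutrality still belongs to a human reviewer.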


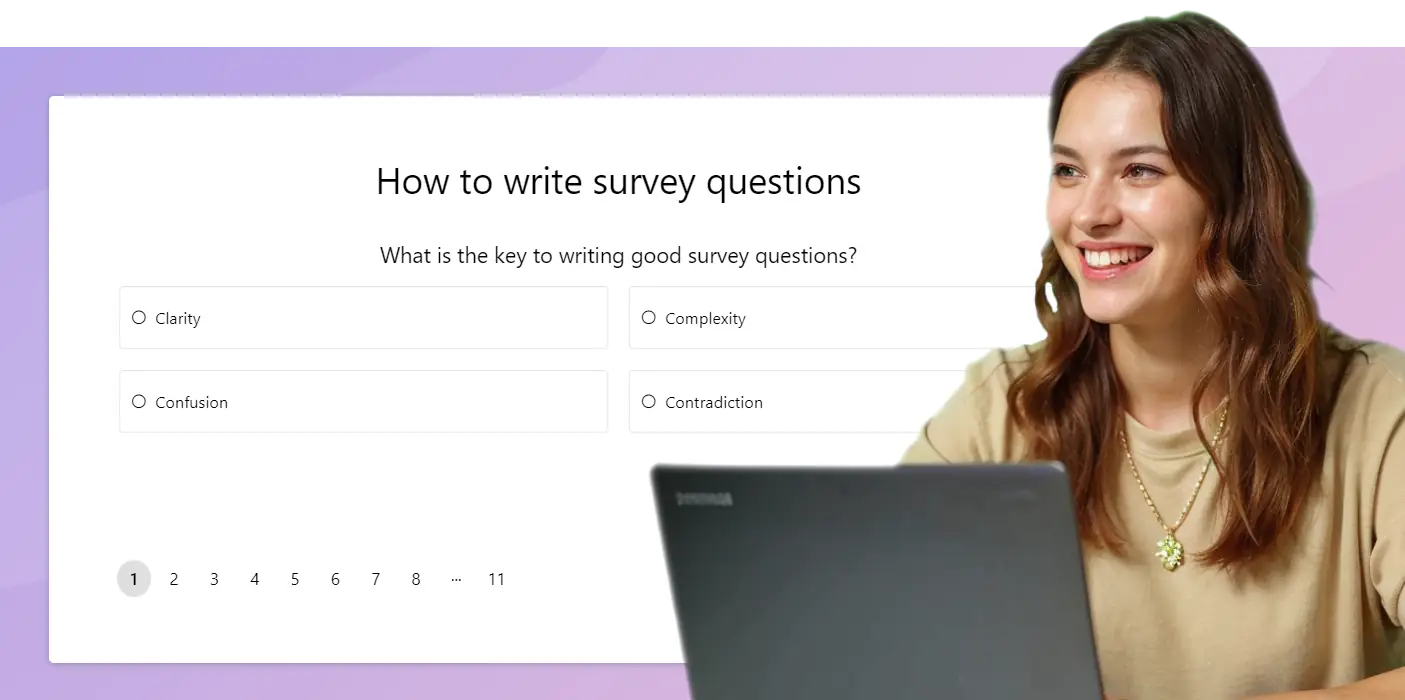

How to Write Good Survey Questions

Identify Your Aims and Avoid Response Bias

Before creating survey questions, define the main objective of your survey. What do you want to achieve? Who are you targeting? The answers shape your questions and help you avoid response bias. Every question should be relevant to, and aligned with, the survey's goals so that the data you collect is accurate and actionable. Drafting a list of your survey's aims, audience, and key points helps you visualize its structure, spot gaps, and cut unnecessary or redundant questions.

See What's Been Done Before

Reviewing existing surveys can save time and improve the quality of your questions. Search for validated questions from reputable research studies: established questions have already been tested, so they help your survey collect reliable data. You can adapt them to fit your needs, but bear in mind that changing the wording may make your results less comparable with the original studies. Seeing how other surveys have approached similar topics also helps you avoid common pitfalls and fine-tune your own questions.

Choose Your Question Type

The two main types of survey questions are open-ended and closed-ended. Open-ended questions let respondents answer in their own words and yield qualitative insights. Closed-ended questions offer predefined answers and are ideal for collecting quantitative data. Depending on your goals, you can combine both: closed-ended questions to measure general trends and open-ended questions to gather detailed feedback.

How Many Questions Should a Survey Have?

As a rule of thumb, surveys with 10 to 30 questions tend to strike a balance between thoroughness and engagement. Longer surveys may lead to higher dropout rates, which lowers data quality. Aim for brevity and relevance: each question should have a specific purpose.

How to Order Survey Questions

Start with easier, general questions and then move to more specific ones. Group similar questions together, and place the most important ones early so that you capture critical data even from respondents who drop out before the end. A logical flow makes it easier for participants to stay engaged.

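How to Phrase Survey Questions

- Clarity: Make sure each question is clear and free of ambiguity.

- Neutrality: Avoid leading language so that responses are unbiased.

- Avoid Absolutes: Steer clear of words like "always" or "never," which rarely reflect real-world behavior. A question such as "Do you always eat breakfast?" pushes most respondents toward "No" even if they eat breakfast on most days.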

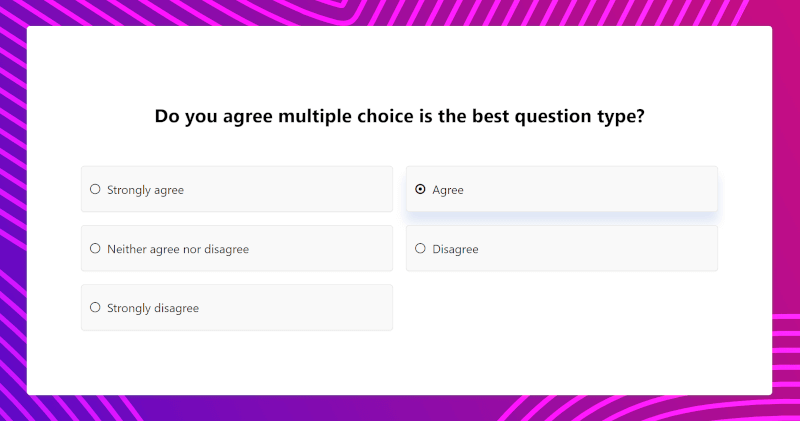

How to Write Good Answers to Multiple-Choice Questions

Offer a balanced variety of answer options so that you capture the full range of opinions. Too few options may limit your insights, while too many can confuse respondents. Where the listed options cannot cover every case, add a catch-all such as "Other" or "None of the above".

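Keep It Focused

Each question should target one specific piece of information. Avoid double-barrelled questions that ask two things at once, such as "How satisfied are you with the price and quality of our product?" Splitting them into separate questions (one about price, one about quality) keeps each question clear and the answers precise.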
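A rough way to catch double-barrelled questions in a long draft is to look for "and" or "or" joining two items. The sketch below assumes questions are stored as plain Python strings, and the heuristic itself is hypothetical and deliberately crude: it will also flag legitimate phrasings such as "a friend or colleague", so treat every hit as something to reread rather than an automatic rejection.

```python
import re

# Hypothetical heuristic: "X and Y" or "X or Y" inside a question may mean it asks two things at once.
CONJUNCTION = re.compile(r"\b(\w+)\s+(and|or)\s+(\w+)\b", re.IGNORECASE)


def double_barrel_candidates(question: str) -> list[str]:
    """Return the 'X and Y' / 'X or Y' fragments that may need splitting into separate questions."""
    return [" ".join(match.groups()) for match in CONJUNCTION.finditer(question)]


print(double_barrel_candidates("How satisfied are you with the price and quality of our product?"))
print(double_barrel_candidates("How often do you use our service?"))
```

The output is the fragment rather than a yes/no answer, so a reviewer can see at a glance whether the conjunction joins two separate topics or is harmless.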

Avoid Repetition

Repeated questions can frustrate participants. Where two questions cover similar ground, make the distinction between them clear. This helps prevent survey fatigue and leads to higher-quality responses.

Keep Things Flexible

Offer flexibility in responses. Allow respondents to skip or opt out of specific questions if needed. Options such as "Not Sure" or "Prefer Not to Answer" can improve the overall quality of your data by reducing discomfort, so that respondents are not pushed into guessing or giving an answer they do not believe.
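If you offer "Not Sure" or "Prefer Not to Answer", decide in advance how to treat those answers in analysis. One common approach, sketched here in Python under the assumption that the answers to a single question arrive as a list of strings, is to count opt-outs separately and compute percentages only over substantive answers, so that declining to answer is not misread as an opinion. The opt-out labels are hypothetical and should match your survey's wording.

```python
from collections import Counter

# Hypothetical opt-out labels; match them to the wording used in your survey.
OPT_OUTS = {"Not Sure", "Prefer Not to Answer"}


def summarize(answers: list[str]) -> dict:
    """Count opt-outs separately and give percentages over substantive answers only."""
    counts = Counter(answers)
    # Counter returns 0 for labels that never appear.
    opted_out = sum(counts[label] for label in OPT_OUTS)
    substantive = {label: n for label, n in counts.items() if label not in OPT_OUTS}
    answered = sum(substantive.values())
    percent = (
        {label: round(100 * n / answered, 1) for label, n in substantive.items()}
        if answered
        else {}
    )
    return {"opted_out": opted_out, "answered": answered, "percent": percent}


answers = ["Yes", "No", "Yes", "Prefer Not to Answer", "Not Sure", "Yes"]
print(summarize(answers))
```

Reporting the opt-out count alongside the percentages keeps the result honest: a reader can see how many people declined, rather than having them silently dropped.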

Pilot, Pilot, Pilot

Testing your survey with a small group before launch helps you identify unclear questions or areas that might confuse participants. Piloting lets you refine the survey so that it collects accurate data and gives respondents a smooth experience.

Types of Survey Questions

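Survey questions fall broadly into two categories: open-ended and closed-ended. Likert scale, multiple-choice, rating scale, and dichotomous questions, described in the table below, are all forms of closed-ended question.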

Closed-ended questions restrict participants to a fixed set of choices and provide primarily quantitative data. They are often used in confirmatory research. Use closed-ended questions when you need standardized answers that are easy to analyze and compare.

Open-ended questions allow participants to respond freely with their own words, providing qualitative data. Use open-ended questions when you need detailed insights into thoughts, opinions, or behaviors.

| Type | Definition and When to Use It |
|---|---|
| Open-ended | Participants answer in their own words, writing as much or as little as they choose. Use them in exploratory research to gather detailed qualitative data. |
| Closed-ended | Participants choose from a fixed set of answers. Often used in confirmatory research, they provide quantitative data that is easy to analyze. |
| Likert scale | A Likert scale typically uses 5, 7, or 9 points to measure how strongly participants agree or disagree with a statement. Use Likert scales to measure attitudes or feelings about something. |
| Multiple choice | Participants pick from a list of predefined answers. Use them when you need to collect structured data from a broad audience. |
| Rating scale | Participants rate an experience or item on a numerical scale (e.g., 1 to 5, or 1 to 10). Use rating scales to measure the intensity of feelings or experiences. |
| Dichotomous | Only two answers are possible (e.g., Yes/No). Use them when you need quick, straightforward responses. |
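Because Likert responses are ordered, they are usually coded as numbers before being summarized. The sketch below assumes a hypothetical 5-point agreement scale coded 1 to 5 and reports the median together with the full distribution. It uses the median because the distances between Likert points are not guaranteed to be equal, although many analysts also report a mean.

```python
from collections import Counter
from statistics import median

# Hypothetical 5-point agreement scale, coded from 1 (lowest) to 5 (highest).
SCALE = [
    "Strongly disagree",
    "Disagree",
    "Neither agree nor disagree",
    "Agree",
    "Strongly agree",
]
CODES = {label: i + 1 for i, label in enumerate(SCALE)}


def summarize_likert(responses: list[str]) -> dict:
    """Convert labels to codes, then report the median and a count for every scale point."""
    codes = [CODES[r] for r in responses]  # raises KeyError on a label not in SCALE
    counts = Counter(responses)
    return {
        "median": median(codes),  # raises StatisticsError if responses is empty
        "distribution": {label: counts[label] for label in SCALE},
    }


responses = ["Agree", "Strongly agree", "Neither agree nor disagree", "Agree", "Disagree"]
print(summarize_likert(responses))
```

Listing every scale point in the distribution, including those nobody chose, makes it easy to spot skewed or polarized answers that a single summary number would hide.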


What are the most common types of survey bias?

- Selection Bias: Occurs when the sample surveyed does not represent the target population, often caused by non-random sampling.

- Response Bias: Happens when respondents answer in a way they think is expected, such as giving socially desirable answers.

- Question Wording Bias: Arises when leading or ambiguous questions influence respondents' answers.

- Non-Response Bias: Results from differences between respondents and those who choose not to participate in the survey.
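Selection bias is usually reduced by drawing respondents at random from a complete list of the target population, often called a sampling frame. A minimal sketch in Python, assuming the frame is a list of hypothetical respondent IDs, uses the standard library's `random.Random.sample`, which draws without replacement so that every member has the same chance of being selected. Random selection does not fix non-response bias, since people who are invited can still decline.

```python
import random
from typing import Optional


def simple_random_sample(frame: list[str], n: int, seed: Optional[int] = None) -> list[str]:
    """Draw n distinct members of the sampling frame, each equally likely to be chosen."""
    if not 0 <= n <= len(frame):
        raise ValueError("sample size must be between 0 and the size of the sampling frame")
    # A dedicated generator with a fixed seed makes the draw reproducible for auditing.
    rng = random.Random(seed)
    # sample() draws without replacement, so nobody is invited twice.
    return rng.sample(frame, n)


# Hypothetical sampling frame of 1,000 customer IDs.
frame = [f"customer-{i:04d}" for i in range(1000)]
invited = simple_random_sample(frame, 50, seed=42)
print(len(invited), invited[:3])
```

The sample is only as representative as the frame it is drawn from: if the list itself leaves out part of the target population, random selection cannot bring those people back in.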

